Monday Sucks: DPM BMR Restore of Hyper-V Cluster Nodes

First of all, let me tell you that I did not get to this point lightly. As a matter of fact, it was quite honestly the LAST damned place I wanted to be. So how did I get here?

A while back, Dell, from whom we purchased our EqualLogic (EQL for short) units, contacted us about some apparently required annual maintenance under our service contract. They email you out of the blue and basically tell you that you have 30 days to schedule a time with an engineer who will help you update the firmware on your EQL units. Oh, and by the way, they want you to update the Host Integration Tools (HIT) Kit on all iSCSI-connected servers at the same time. So I collected the information they wanted and set up some MX (maintenance) time for a weekend. I understand (and completely agree with) updating firmware and software to fix big bugs, so I don't really blame Dell for wanting this done, and quite honestly once a year is not a big deal.

So the MX day comes along and everything goes pretty well, aside from the fact that it took about 12 hours to get it all done because of the EQL firmware, the switch firmware and the long list of servers that needed updating, not to mention that (per Dell) HIT Kit 3.5.1 has to be uninstalled before moving to 4.0. Long day.

However, one little issue sprang up. We run Windows Server Core on our Hyper-V hosts. The tech running the show couldn't find the documentation for removing 3.5.1 from Core, so 4.0 was installed directly over 3.5.1. Turns out... that was a BAD BAD move. Why? My Core Hyper-V hosts are basically headless and configured to boot directly from iSCSI, not from internal disks. After the 4.0 installation, the system for some reason keeps generating more and more iSCSI connections to the boot LUN until... the server crashes, and the VMs on that node fail over (ungracefully) to another host and spin back up. All told, my Hyper-V hosts crash at least once a day.

How do I know Dell's HIT Kit is to blame? The logs... it's all in the logs. I went from a few Event ID 116 events a week (Removal of device xxxx on iSCSI session xxxx was vetoed by xxxx) to 200+ a day per host, and the logs started going nuts right after the post-update reboot.

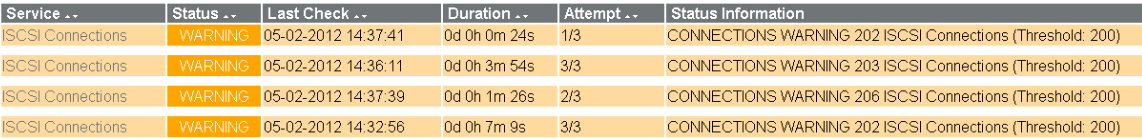

Note: Typically there are about 40-50 per EQL unit, not 200 per unit. When Nagios starts sending me these alarms, a host crash within minutes is guaranteed.
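If you want to put numbers on a log explosion like this, one option is to export the System log and tally Event ID 116 per day. Below is a minimal, illustrative Python sketch, not necessarily how the counts above were produced. It assumes a hypothetical CSV export with an `Id` column and a `TimeCreated` column in ISO 8601 format (a real export often uses your locale's date format, so adjust the parsing to match).

```python
import csv
import io
from collections import Counter
from datetime import datetime

# Event ID 116: "Removal of device ... on iSCSI session ... was vetoed by ..."
VETO_EVENT_ID = 116


def count_veto_events_per_day(csv_text: str) -> Counter:
    """Count Event ID 116 entries per calendar day.

    Assumption (hypothetical export format): the CSV has an "Id" column
    and a "TimeCreated" column in ISO 8601 form, e.g. 2012-01-02T08:15:00.
    """
    counts: Counter = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Skip every event that is not the iSCSI "removal vetoed" event.
        if int(row["Id"]) != VETO_EVENT_ID:
            continue
        # Bucket the event by the date part of its timestamp.
        day = datetime.fromisoformat(row["TimeCreated"]).date()
        counts[day.isoformat()] += 1
    return counts
```

Run it per host and compare the daily totals: a jump from a handful a week to hundreds a day stands out immediately.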

Does anybody else see the pattern that starts to develop at some random time after boot? It could be 12 hours after boot or 3. No rhyme or reason. The host just starts creating new connections to the LUN and never releases the old ones until the system goes down in flames.
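The Nagios alarms mentioned above can be approximated with a small Nagios-style check. The sketch below is a hypothetical Python plugin, not the actual check used here. It assumes you already have a per-EQL-unit iSCSI connection count from somewhere, and its thresholds (warn at 60, critical at 100) are illustrative assumptions placed above the 40-50 baseline noted earlier. It returns the standard Nagios plugin exit codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN) plus a status line with perfdata.

```python
from typing import Dict, List, Tuple

# Standard Nagios plugin exit codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def check_iscsi_connections(
    counts: Dict[str, int], warn: int = 60, crit: int = 100
) -> Tuple[int, str]:
    """Return (exit_code, status_line) for per-unit connection counts.

    The warn/crit defaults are hypothetical; tune them to your baseline.
    """
    if not counts:
        return UNKNOWN, "UNKNOWN - no connection data"
    # Alert on the unit with the most connections.
    worst_unit = max(counts, key=counts.get)
    worst = counts[worst_unit]
    # Nagios perfdata: space-separated label=value pairs after a "|".
    perfdata = " ".join(f"{unit}={n}" for unit, n in sorted(counts.items()))
    if worst >= crit:
        return CRITICAL, f"CRITICAL - {worst_unit} has {worst} connections | {perfdata}"
    if worst >= warn:
        return WARNING, f"WARNING - {worst_unit} has {worst} connections | {perfdata}"
    return OK, f"OK - max {worst} connections ({worst_unit}) | {perfdata}"


def main(argv: List[str]) -> int:
    """Parse hypothetical "unit=count" arguments, print status, return exit code."""
    counts: Dict[str, int] = {}
    for arg in argv:
        unit, _, value = arg.partition("=")
        counts[unit] = int(value)
    code, message = check_iscsi_connections(counts)
    print(message)
    return code
```

To run it as an actual plugin, a thin wrapper would call `sys.exit(main(sys.argv[1:]))` with arguments such as `eql01=45 eql02=48` (a hypothetical argument format). Because the leak shows up as a steady climb rather than a sudden spike, a WARNING threshold close to the baseline tends to buy more time before a crash.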

I opened a case with Dell and spent almost 4 hours on the phone while they had tech after tech look at it and come up with bupkis. At Dell's recommendation I've uninstalled 4.0 and gone back to 3.5.1. I've gone through the registry bit by bit looking for residual 4.0 entries. I've spent all kinds of time on TechNet and Google looking for anything that might solve the issue. No joy. I've sent multiple DSETs and a couple of Lasso files. Nada. The final straw came this morning (a summary of the email conversation; I was much more polite than this):

Tech: Please send more DSETs so we can send to Engineers.

Me: WTF happened to the last ones I sent on Friday?! Nothing has changed except for more crashes.

Tech: Oh... there they are teehee ... let me get them to the engineers.

Me: Like what should have happened two days ago?

... sounds of birds chirping as the emails stopped coming ...

Anyway, part of this was my own stupidity. I should have snapshotted the boot LUNs before the MX (and dammit, I thought about it and then forgot to add it to my checklist). Whoops. Thankfully I was smart enough to make sure BMR backups were being taken of the Hyper-V hosts (and had tested a restore before actually trusting them).

The process for running a BMR restore is pretty simple, and even though these are clustered hosts, it remains pretty much the same (even with iSCSI boot).

The generic process can be found here: http://blogs.technet.com/b/dpm/archive/2010/05/12/performing-a-bare-metal-restore-with-dpm-2010.aspx

The only gotchas with my setup were:

1. I had to migrate all the VMs from one host to the other and put the host in maintenance mode (SCVMM).

2. I had to let the iSCSI connections start and then cancel booting from the LUN, at which point I was prompted to boot from CD. This way the boot LUN was attached to the server and Windows Setup could see it. Obviously, setting up the restore from Admin Tools wasn't an option on Core.

3. Since my BMR backup was apparently a little too old (2 weeks?!), I had to remove the host from the domain and rejoin it. Not a big deal, and the cluster picked the host right back up as if nothing had changed.

4. Only do one host at a time (depending, obviously, on how much failure your cluster can tolerate).

Now I get to spend many hours monitoring every little thing to make sure the host stays stable and doesn't go LUN-happy again. Needless to say, HIT Kit 4.0 is no longer on my 'update' list. Here's hoping this fixes it...

UPDATE: No go. This did not fix the issue. At this point I'm at a loss. I went back to a BMR backup taken before the install and still have the same problem, which did NOT exist when that BMR backup was taken. The temporary solution is that I'm migrating one host to physical disks inside the chassis (post on how I'm doing that upcoming), so at least one host is stable and can run the critical VMs along with the ones that don't handle spontaneous reboots very well (like SQL).